Selfishly, I’ve wanted to write on this topic for some time, mostly because I’ve found myself lost in the forest of abstraction that seems all too common in IT today. I remember a tweet that said something to the effect of: it’s scary how many layers of abstraction there are, and amazing that we have to decipher all of them. Capacity is clearly a hot topic, whether it is public cloud vendors charging on “pay for use” models or customers running internal clouds with “charge-back” or “show-back” models to keep costs in check. Either way, when you move to a Hyper-Converged architecture, it’s important to understand how capacity is consumed and where you can monitor it, through the CLI or vCenter. This blog is geared toward both beginner and advanced vSphere administrators in a vSAN environment. That includes customers who have purchased a VxRail appliance, since vSAN is the underlying storage technology for that system. It focuses on vSphere/vSAN/VxRail version 7, because there were many known issues with capacity reporting in 6.x, specifically around the VM-level views.

First, we need to cover some vSAN basics before we look at capacity utilization; this will help explain the graphs we look at later. vSAN is a Hyper-Converged Infrastructure technology from VMware that takes the local drives in your vSphere hosts and combines them into a single logical datastore. vSAN is a modern object-based storage system architected for vSphere. Usually, when people hear about object-based storage, they think of RESTful APIs and applications consuming storage directly. With vSAN, vSphere consumes this object storage subsystem directly and presents it to you as simple virtual machine storage. (In reality, vSAN offers more than just VM storage, but we won’t focus on those features today.)

It is important to understand the structure of a VM on vSAN. I’m going to cover it at a high level, but there is a much deeper dive worth reading in the vSAN 6.7 Deep Dive book (no excuse not to buy this book! It’s FREE with an Amazon Kindle Unlimited plan.) Each VM is made up of a set of objects: virtual disk, VM home, VM swap, snapshot, and snapshot delta. Each of these objects is in turn made up of components. The number of components is dictated by the storage policy applied to the object and by the object’s size; by default, a component can grow to a maximum size of 255GB. Typically, an object inherits the storage policy you assigned to the entire VM, but it is possible to apply different storage policies to different objects of the same virtual machine. The next thing we need to understand is how Storage Policy Based Management (SPBM) works. Storage policies are not exclusive to vSAN, but vSAN relies exclusively on them. Storage policies are a vCenter feature that lets you define storage configurations within vSphere. They use the VMware APIs for Storage Awareness (VASA), with 3rd party storage products as well as vSAN, to streamline storage management. Since we are discussing capacity in vSAN, we are going to focus on the failures to tolerate (FTT) setting in your storage policies.
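To make the component math a little more concrete, here is a minimal Python sketch of a simplified model, assuming a mirrored (RAID-1) object whose replicas are each split into chunks of at most 255GB. The function name `mirrored_data_components` is hypothetical, and the model ignores witness components and stripe-width rules, so the component count vSAN actually creates for an object can be higher.

```python
import math

# Default maximum component size described in the text above.
MAX_COMPONENT_GB = 255


def mirrored_data_components(object_size_gb: float, ftt: int) -> int:
    """Approximate data-component count for a mirrored (RAID-1) object.

    Simplified model: FTT=n mirroring keeps n + 1 full replicas, and each
    replica is split into ceil(size / 255GB) components. Witness components
    and stripe-width settings are ignored.
    """
    if object_size_gb <= 0 or ftt < 0:
        raise ValueError("size must be positive and FTT non-negative")
    replicas = ftt + 1
    components_per_replica = math.ceil(object_size_gb / MAX_COMPONENT_GB)
    return replicas * components_per_replica


print(mirrored_data_components(100, 1))  # 2: two replicas of one component each
print(mirrored_data_components(600, 1))  # 6: two replicas of three components each
```

The point of the sketch is that both the policy (number of replicas) and the object size (number of 255GB chunks) multiply into the component count.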

Failures to Tolerate

FTT settings are where you configure the availability of your data. The name of the setting is fairly straightforward: it asks how many fault domain failures you want to be able to withstand. Notice I said a fault domain and not a host; that is because nodes can be grouped into fault domains. Common examples are a stretched cluster, where each site is a fault domain, or fault domains created for each rack of nodes. If you have not configured a stretched cluster or fault domains, each vSphere/vSAN host is its own fault domain. So when you configure a storage policy with an FTT setting of 1, you are telling vSAN to protect you from one node failure. With an FTT setting of 2, you could handle two simultaneous node failures. This setting is easy to find, as it’s the first setting you configure on a storage policy after selecting “Enable Rules for vSAN.” To support a mirrored policy with an FTT setting of n, you need at least 2n+1 fault domains. So with FTT=1, the minimum is 2(1)+1 = 3 hosts.
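The 2n+1 rule is simple enough to express as a one-line function. This is a minimal Python sketch; the function name is hypothetical, and the docstring gives the usual reasoning for the rule in the typical layout, not a description of vSAN internals.

```python
def min_fault_domains_mirroring(ftt: int) -> int:
    """Minimum fault domains for a mirrored policy with FTT=n: 2n + 1.

    In the typical layout, n + 1 fault domains hold data replicas and the
    remaining n hold witness components, so more than half of the object's
    votes survive n fault-domain failures.
    """
    if ftt < 0:
        raise ValueError("FTT cannot be negative")
    return 2 * ftt + 1


for ftt in (1, 2, 3):
    print(f"FTT={ftt}: at least {min_fault_domains_mirroring(ftt)} fault domains")
```

For FTT=1 this gives 3 and for FTT=2 it gives 5, matching the mirroring host minimums in the chart that follows.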

Creating a storage policy is easy: click on your Menu, go to Policies and Profiles, and click on Create VM Storage Policy. Notice that when you select an FTT setting there are two options, Mirroring and Erasure Coding. This determines how the components are laid out across the cluster. While not precisely the same, you can compare these to the RAID algorithms of old. Mirroring keeps complete copies of the data, similar to RAID 1/10. Erasure Coding is similar to RAID 5 and RAID 6 stripes, where parity is calculated and written alongside the data. Each option also has its own host minimum for the stripe width. All of these settings affect how much raw capacity your data consumes across the cluster, which is why we are diving deep into them. Here is a quick chart:

FTT=1 (Mirroring, RAID-1): 200% raw consumption, so a 100GB VMDK uses 200GB. 3 host minimum, 4 recommended.

FTT=1 (Erasure Coding, RAID-5): 133% raw consumption, so a 100GB VMDK uses 133GB. 4 host minimum, 5 recommended.

FTT=2 (Mirroring, RAID-1): 300% raw consumption, so a 100GB VMDK uses 300GB. 5 host minimum.

FTT=2 (Erasure Coding, RAID-6): 150% raw consumption, so a 100GB VMDK uses 150GB. 6 host minimum.
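The percentages in the chart follow directly from each policy’s layout. Here is a minimal Python sketch, assuming vSAN 7’s erasure coding stripes of 3 data + 1 parity (RAID-5) and 4 data + 2 parity (RAID-6), and a fully written VMDK (vSAN objects are thin provisioned by default, so raw consumption tracks written data rather than provisioned size). The helper names are hypothetical.

```python
from fractions import Fraction


def mirroring_multiplier(ftt: int) -> Fraction:
    # FTT=n mirroring stores n + 1 full copies of the data.
    return Fraction(ftt + 1)


def erasure_multiplier(data: int, parity: int) -> Fraction:
    # Each stripe stores `data` data blocks plus `parity` parity blocks.
    return Fraction(data + parity, data)


# Assumed stripe layouts: RAID-5 is 3 data + 1 parity, RAID-6 is 4 data + 2 parity.
POLICIES = {
    "FTT=1 Mirroring": mirroring_multiplier(1),
    "FTT=1 Erasure Coding (RAID-5)": erasure_multiplier(3, 1),
    "FTT=2 Mirroring": mirroring_multiplier(2),
    "FTT=2 Erasure Coding (RAID-6)": erasure_multiplier(4, 2),
}

for name, multiplier in POLICIES.items():
    raw_gb = 100 * multiplier  # raw capacity for a fully written 100GB VMDK
    print(f"{name}: 100GB VMDK -> {float(raw_gb):.0f}GB raw")
```

Using `Fraction` keeps the RAID-5 multiplier exact at 4/3; the chart’s 133% is that value rounded.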

Monitoring vSAN Capacity Cluster Wide

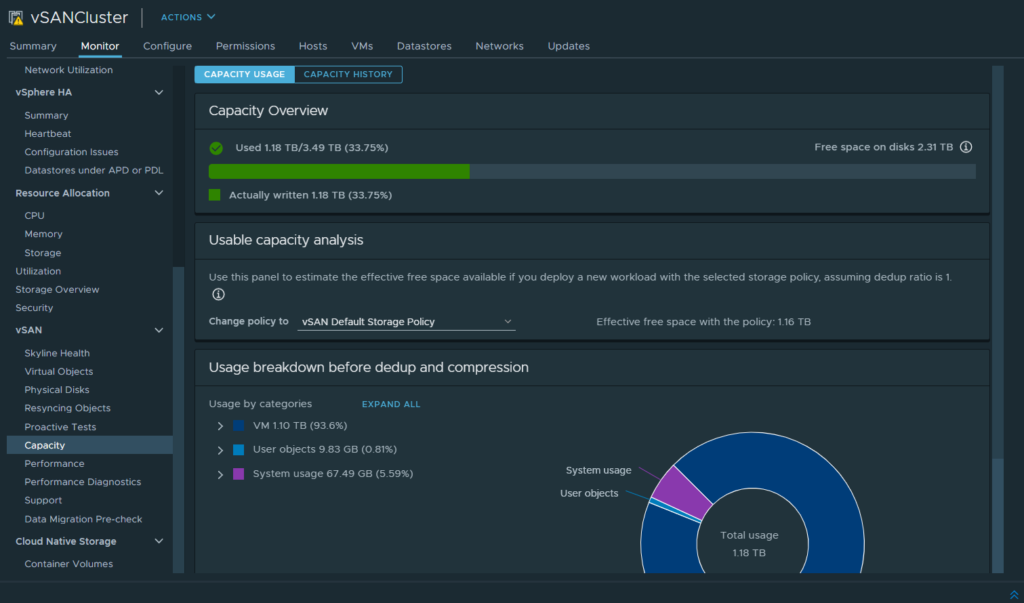

Now that we have the basics out of the way, let’s start looking at capacity management in the vSphere Client. To see overall usage, click on the cluster, select “Monitor,” and scroll down to the “vSAN” section. The “Capacity” page shows several graphs about the capacity of the cluster.

The first graph is the overall capacity view. It includes every item in the vSAN datastore: system usage, ISOs you have uploaded, and all objects and their replicas. This is a good spot to understand your overall capacity. It is fairly common today to show only overall raw disk usage, because most modern storage solutions are very dynamic in how they consume capacity. As we discussed earlier, a storage policy can be applied at the VM or individual object level, so there is no single usable-capacity figure: it depends on which policies your vSAN virtual machines use. Fortunately, since vSAN 6.7, there is a usable capacity calculator in the next section. Its drop-down lists all your storage policies, so you can see how much usable space you would have left with a given policy. The final section is where we find the breakdown of what makes up your vSAN datastore. You can expand each category for further details.
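The idea behind a per-policy usable capacity estimate can be approximated by dividing free raw capacity by the policy’s raw-capacity multiplier. This is a minimal Python sketch under that simplifying assumption, not necessarily how vCenter’s calculator computes its figure; the function name and the 10,000GB free-space figure are hypothetical.

```python
def usable_gb_for_policy(free_raw_gb: float, multiplier: float) -> float:
    """Approximate usable space left if all new data used one policy.

    Simplification: divides free raw capacity by the policy's raw-capacity
    multiplier and ignores any reserve or slack space vSAN keeps free.
    """
    if multiplier <= 0:
        raise ValueError("multiplier must be positive")
    return free_raw_gb / multiplier


free_raw_gb = 10_000  # hypothetical free raw capacity, not from the lab in this post
for name, multiplier in [("FTT=1 Mirroring", 2.0), ("FTT=1 Erasure Coding", 4 / 3)]:
    print(f"{name}: ~{usable_gb_for_policy(free_raw_gb, multiplier):.0f}GB usable")
```

The same free raw capacity yields about 5,000GB usable under FTT=1 mirroring and about 7,500GB under FTT=1 erasure coding, which is why the calculator asks you to pick a policy.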

Let’s take an example of how capacity is shown before and after a storage policy change. All of my virtual machines currently use FTT=1 (Mirroring), which you can confirm by looking at the VMs tied to the storage policy.

When I look at the capacity used for virtual disk (VMDK) storage on the Capacity screen, I have used 1.2TB: 608GB for primary components and 608GB for secondary. This aligns with the 200% raw consumption of a mirroring policy with an FTT setting of 1. Now, I’m going to change all of my virtual machines to an erasure coding policy. Note: changing all your VMs at once is generally not a best practice, because a policy change triggers a resync, which is an I/O operation. vSAN has several capabilities to limit these backend operations so they do not affect front-end performance, but it is still a best practice to make changes in batches.
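Before the resync finishes, a quick back-of-the-envelope projection shows roughly what to expect. This minimal Python sketch is an estimate, not a measured value from the lab; it assumes the 608GB of primary data stays constant and ignores witness components and metadata.

```python
primary_gb = 608  # primary VMDK components from the Capacity view above

mirrored_total_gb = primary_gb * 2   # FTT=1 mirroring keeps two full copies
raid5_total_gb = primary_gb * 4 / 3  # FTT=1 RAID-5 assumed as 3 data + 1 parity

print(f"Mirroring (measured): {mirrored_total_gb}GB")
print(f"RAID-5 (estimated): {raid5_total_gb:.0f}GB")
print(f"Estimated savings: {mirrored_total_gb - raid5_total_gb:.0f}GB")
```

The mirrored total of 1,216GB matches the roughly 1.2TB on the Capacity screen, and the erasure coding estimate comes out around 811GB.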

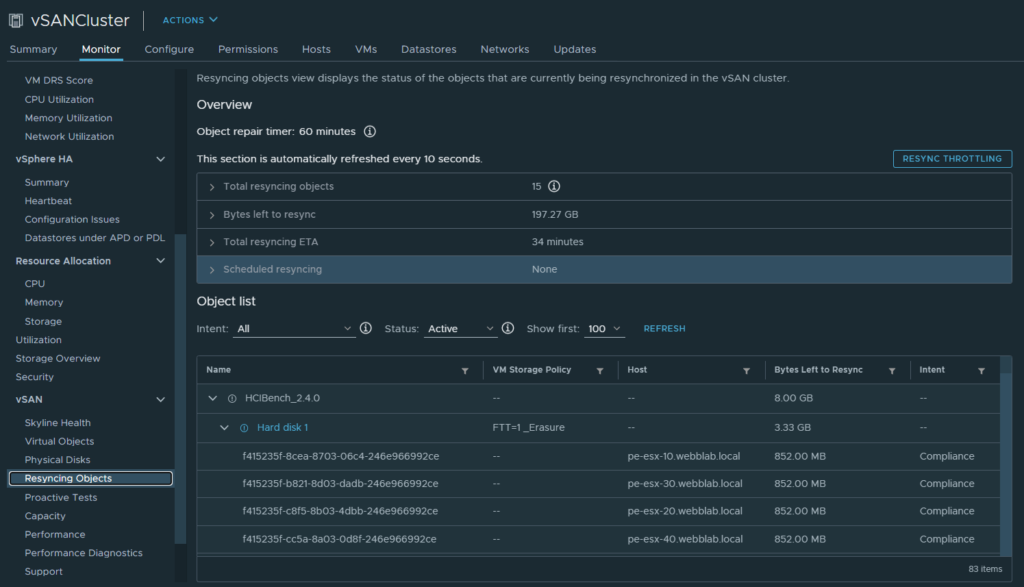

Now that we have changed the storage policies, we can monitor the progress of the components being reconfigured on the resyncing components page.

Once the resync operation has completed, we can see how this change has affected overall capacity in the cluster. We now have roughly the 133% raw consumption of erasure coding instead of the 200% of mirroring.

Per-VM Level Views

There were a number of enhancements to how vCenter reports virtual machine usage. There is also a new VM view in vCenter that is a much-welcomed redesign of how important virtual machine information is presented. Let us take a look at the VM-level view of one of my virtual machines.

We are going to focus on the capacity and usage part of this screen. My virtual machine shows a used capacity of 128.16GB. This screen shows the used capacity of the virtual machine itself and does not include replicas, unlike the vSAN capacity view. Let’s see what PowerCLI shows us for this virtual machine (credit to Marc Hamilton for the last command, which does the math for used capacity).

```powershell
C:\Users\matt_webb> $SQLDBVM = Get-VMGuest -VM SQL-DB-01
C:\Users\matt_webb> $SQLDBVM

State   IPAddress            OSFullName
-----   ---------            ----------
Running {10.10.100.204, f... Microsoft Windows Server 2019 (64-bit)

C:\Users\matt_webb> $SQLDBVM.Disks

CapacityGB FreeSpaceGB Path
---------- ----------- ----
    89.398      63.260 C:\
    99.982      99.886 E:\
    99.982      30.643 F:\

C:\Users\matt_webb> $SQLDBVM.Disks | select CapacityGB, FreeSpaceGB, Label,@{Name="UsedGB";Expression={[math]::round($_.CapacityGB - $_.FreeSpaceGB)}}

CapacityGB            FreeSpaceGB           Label UsedGB
----------            -----------           ----- ------
89.398433685302734375 63.25998687744140625        26
99.982418060302734375 99.88580322265625           0
99.982418060302734375 30.643489837646484375       69
```

If we focus on the last command, you can see that the guest OS reports about 95GB used across its volumes (26 + 0 + 69). But what gives? The vCenter screen shows 128GB. This can happen for a number of reasons. Further investigation inside my VM using Optimize-Volume in PowerShell shows that there are blocks that have been marked for deletion and still need to be trimmed.

```powershell
PS C:\Users\Administrator.WEBBLAB> Optimize-Volume -DriveLetter C,E,F -WhatIf -Verbose
VERBOSE: Invoking retrim on New Volume (E:)...
VERBOSE: Invoking retrim on New Volume (F:)...
VERBOSE: Invoking retrim on (C:)...
VERBOSE: Performing pass 1:
VERBOSE: Performing pass 1:
VERBOSE: Retrim: 0% complete...
VERBOSE: Retrim: 30% complete...
VERBOSE: Retrim: 0% complete...
VERBOSE: Retrim: 100% complete.
VERBOSE: Retrim: 31% complete...
VERBOSE: Retrim: 100% complete.
VERBOSE: Performing pass 1:
VERBOSE: Retrim: 32% complete...
VERBOSE:
Post Defragmentation Report:
VERBOSE: Retrim: 7% complete...
VERBOSE: Retrim: 44% complete...
VERBOSE:
Volume Information:
VERBOSE: Retrim: 57% complete...
VERBOSE: Volume size = 99.98 GB
VERBOSE: Cluster size = 4 KB
VERBOSE: Used space = 69.33 GB
VERBOSE: Free space = 30.64 GB
VERBOSE:
Retrim:
VERBOSE: Backed allocations = 99
VERBOSE: Allocations trimmed = 29
VERBOSE: Total space trimmed = 28.19 GB
```

The retrim report shows 28.19GB trimmed, which accounts for most of the roughly 33GB discrepancy between the two sources of information.
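The reconciliation between the guest and vCenter can be checked with a little arithmetic. This minimal Python sketch reuses the figures from the two outputs above; Python’s `round()` and .NET’s `[math]::Round()` both default to round-half-to-even, so the per-volume results match the PowerCLI `UsedGB` column.

```python
# (CapacityGB, FreeSpaceGB) per guest volume, copied from the Get-VMGuest output.
disks = {
    "C": (89.398433685302734375, 63.25998687744140625),
    "E": (99.982418060302734375, 99.88580322265625),
    "F": (99.982418060302734375, 30.643489837646484375),
}

# Same math as the PowerCLI expression: round(CapacityGB - FreeSpaceGB) per volume.
used_gb = {drive: round(cap - free) for drive, (cap, free) in disks.items()}
guest_used_gb = sum(used_gb.values())

vcenter_used_gb = 128.16  # from the VM's capacity and usage view
trimmed_gb = 28.19        # "Total space trimmed" from Optimize-Volume
gap_gb = vcenter_used_gb - guest_used_gb

print(used_gb)  # {'C': 26, 'E': 0, 'F': 69}
print(f"guest used: {guest_used_gb}GB, gap: {gap_gb:.2f}GB")
print(f"trimmed share of gap: {trimmed_gb / gap_gb:.0%}")
```

The guest reports 95GB used, leaving a gap of about 33GB, of which the trimmed 28.19GB is roughly 85%.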

How Maintenance Mode Affects Capacity

I couldn’t write a blog about capacity without discussing Maintenance Mode (MM). One of the major differences between traditional storage and HCI is that we no longer rely on a Redundant Array of Independent Disks (otherwise known as RAID; the I is also referred to as inexpensive instead of independent). Instead, we are moving to a redundant array of independent nodes (RAIN). So if we take a node offline, that affects overall capacity. This is why you will commonly hear the best practice of sizing an HCI cluster N+1, with one node beyond the minimum, to maintain a higher service level. The extra node gives vSAN somewhere to rebuild data during a maintenance mode or failure scenario. As we learned, the storage policy FTT setting determines the minimum number of nodes; we should take that number and add a node to provide an additional fault domain and (presumably) storage for rebuild space. Since 6.7U3, you can see how maintenance mode operations affect your overall capacity in a vSAN or VxRail cluster.
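The N+1 guidance can be sketched as two small checks: add one host to the policy minimum, and confirm that the used raw data would still fit if one host went offline. This is a minimal Python sketch under stated assumptions (identical hosts, no slack space reserved, fault-domain placement not modeled); the function names and the 4 x 10TB cluster are hypothetical.

```python
def recommended_hosts(policy_min_hosts: int) -> int:
    """N+1 sizing: the policy's minimum host count plus one spare."""
    return policy_min_hosts + 1


def fits_with_one_host_offline(hosts: int, raw_tb_per_host: float,
                               used_raw_tb: float) -> bool:
    """Could the used raw data fit on the remaining hosts with one offline?

    Assumes identical hosts and ignores any slack space vSAN keeps free.
    """
    if hosts < 2:
        raise ValueError("need at least two hosts")
    return used_raw_tb <= (hosts - 1) * raw_tb_per_host


print(recommended_hosts(3))                       # FTT=1 mirroring: 4
print(fits_with_one_host_offline(4, 10.0, 28.0))  # True: 30TB remains
print(fits_with_one_host_offline(4, 10.0, 32.0))  # False: only 30TB remains
```

Both checks matter: the extra host provides a spare fault domain, and the capacity check shows whether there is room to rebuild onto it.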

Let’s briefly cover the 3 maintenance mode options available when we take a host into maintenance mode.

Full data migration: This evacuates all data from the host’s vSAN disks to other hosts before the host enters maintenance mode.

No data migration: This leaves the data in place and lets the host enter maintenance mode. Components on that host become inaccessible, so a virtual machine with an FTT setting of 0 and data on that host would lose access to its storage and go offline.

Ensure accessibility: This ensures that all VMs keep access to their data so they stay online, but it allows components that have a redundant copy elsewhere to stay where they are and go offline. It also starts a repair delay timer, so that vSAN rebuilds those components after a set amount of time (assuming you have a spare fault domain and enough space).

Now that we understand the different maintenance mode options, let’s go to the pre-check and see which scenarios we can perform. I’m going to run the pre-check with “Ensure accessibility” on one of my hosts.

As you can see in the output, I can enter this maintenance mode, but I will have non-compliant virtual machines. That is because we changed the virtual machines to FTT=1 erasure coding: while this host is in maintenance mode, one more failure would make their data inaccessible. Now let’s run a pre-check on “Full data migration.”

You probably guessed it already, but I’m not able to perform this action. That’s because we previously changed the virtual machines on a 4-node cluster to an erasure coding policy. FTT=1 erasure coding requires 4 hosts, so with one host in maintenance mode there is no other fault domain to move the data to.
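The fault-domain half of that pre-check result can be modeled in a few lines: for a full data migration, the hosts that remain must still meet the policy’s host minimum. This is a hypothetical Python model for illustration, not the logic vCenter’s pre-check uses, and it ignores capacity, which the real pre-check also reports on.

```python
# Host minimums per policy, taken from the chart earlier in this post.
POLICY_MIN_HOSTS = {
    "FTT=1 Mirroring": 3,
    "FTT=1 Erasure Coding": 4,
    "FTT=2 Mirroring": 5,
    "FTT=2 Erasure Coding": 6,
}


def can_fully_evacuate(cluster_hosts: int, policy: str) -> bool:
    """Rough fault-domain check for "Full data migration" on one host.

    Every component must land on a host other than the one entering
    maintenance mode, so the remaining hosts must still meet the policy
    minimum. Free capacity is not considered here.
    """
    return cluster_hosts - 1 >= POLICY_MIN_HOSTS[policy]


print(can_fully_evacuate(4, "FTT=1 Erasure Coding"))  # False: only 3 hosts remain
print(can_fully_evacuate(4, "FTT=1 Mirroring"))       # True
print(can_fully_evacuate(5, "FTT=1 Erasure Coding"))  # True
```

This is the same N+1 reasoning from earlier: a fifth host would give the erasure coded data a place to go.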

Wrap Up!

vSAN and VxRail can make storage infrastructure radically simple through automation and tight integration with existing VMware tools. Hopefully this blog helped you better understand Hyper-Converged operations and capacity management! If you have any questions or comments, certainly reach out to me!