In IT, there is certainly no shortage of tools. Our industry amplifies this, thanks to a rich and creative community of people who love to build things. However, when it came to sizing infrastructure, the toolset used to be very limited, at least early in my career. Most tools in the ecosystem are geared towards the performance of a specific layer, whether that be a specific product, operating system, or hypervisor. In general, these tools were built primarily for performance tuning or general performance monitoring, which usually made data collection for sizing cumbersome. For example, I remember having to try to obtain 7GB SAN HQ files for EqualLogic!

So when you are looking to size your next generation of infrastructure, Live Optics should be on your shortlist. Live Optics is an evolution of Dell’s DPACK (Dell Performance Analysis Collection Kit), enhanced and developed into a free SaaS offering for customers, partners, and vendors. While the tool is primarily used for its host-based collector (named Optical Prime), Live Optics has also developed tools for other file- or platform-specific collection, including Dossier, CLARiiON/VNX, Isilon, Unity, VMAX, PowerMax, XtremIO, Hitachi, HPE 3PAR, IBM Storwize, NetApp, PowerStore, Pure, Avamar, NetWorker, NetBackup, Commvault, IBM TSM, Veeam, SQL Server, and Data Domain.

What Makes Live Optics Different?

Live Optics is not the only tool out there for infrastructure sizing. What I want to focus on are the basic reasons why Live Optics became my default choice for sizing. Keep in mind that these points mostly refer to Live Optics’ most common tool, Optical Prime. I’m also a big advocate of having more than one data source when time allows, so even if all of these points speak to you, consider other data sources if they make sense for your particular situation.

Simplicity: Running the tool, as I outlined in my earlier blog post, is very efficient. Obtaining the collector, running it against a variety of sources, and getting the data to the Live Optics cloud for analysis is all streamlined and puts little overhead on your system administrators. A full list of supported operating systems is available here.

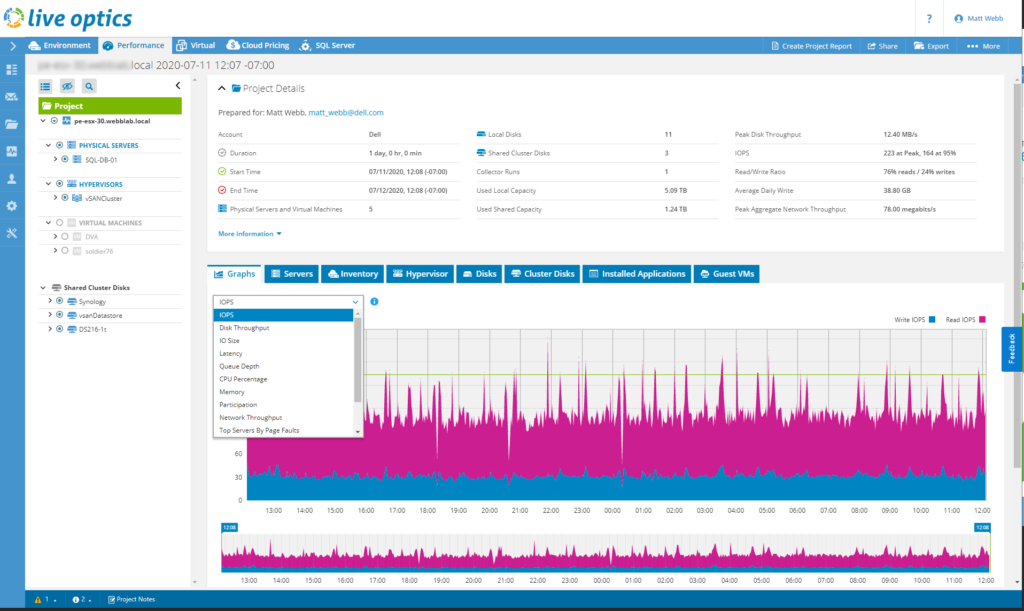

SaaS Platform: As mentioned earlier, Live Optics has evolved into a completely free software-as-a-service offering. When you run the collector, the default option is to upload the data to the site for you to view (there is also a manual collection option if you have a “dark site”). The software, running in a secure cloud, lets users log in and work with their projects dynamically: removing clusters, servers, or datastores that don’t need to be reviewed, or drilling down to specific levels for a deeper look at a host’s or datastore’s performance. The inventory collection has gained a massive number of features over the last year. Not only can you see your host configuration, such as processor, total memory, model, and service tag, but you can also export this information to Excel. Clicking “export to excel” on any of the servers, inventory, hypervisor, disks, cluster disks, installed applications, or guest VMs tabs produces the same set of workbooks, up to two in total. The first, named in a “LiveOptics_project#_date.xlsx” format, gives you a detailed hardware view of GPUs, hypervisors, local and shared disks, VMs, installed applications, and cloud pricing. The second, named in a “LiveOptics_project#_VMware_date.xlsx” format, is available if you run the tool against a vCenter system; this workbook gives you a deep dive into VM metrics.

Performance Statistics: This is a big one! Most commonly used tools focus solely on inventory collection. A very common tool in the VMware community is RVTools. It is also on my shortlist and I use it often (it is frequently my second data source in more complex situations), but its performance statistics are only a “point in time” snapshot. Live Optics’ performance statistics are especially rich in the storage area. While we do live in an age of all-flash storage systems, understanding an I/O profile is still essential. For example, in a VxRail system based on VMware vSAN, I have a plethora of SSD options. Do I go with Intel Optane drives for my cache tier? Do I consider low-cost SATA SSDs for my capacity tier? Decisions like these can swing the cost anywhere from a couple of hundred dollars to hundreds of thousands of dollars. With all that said, the CPU and memory statistics cannot be overlooked. Live Optics reports vCPU-to-pCPU aggregation as well as overall clock speed and CPU saturation. Memory is reported as provisioned, active, and consumed, along with whether page swapping is occurring. You would not believe how many people discover interesting things about their environment from running this tool!
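To make the vCPU-to-pCPU figure concrete, here is a minimal sketch of the arithmetic behind a consolidation ratio. It assumes you already have the vCPU count of each VM and the physical core count of each host (for example, copied out of the exported workbook). The `Host` record, the function name, and the sample numbers are hypothetical, and counting physical cores rather than hyper-threaded logical processors as "pCPU" is an assumption; this is not Live Optics' own calculation.

```python
from dataclasses import dataclass


@dataclass
class Host:
    """Hypothetical host record: physical core count and the vCPUs of each VM it runs."""
    name: str
    physical_cores: int
    vm_vcpus: list[int]


def vcpu_to_pcpu_ratio(hosts: list[Host]) -> float:
    """Total vCPUs allocated across all VMs divided by total physical cores."""
    total_vcpus = sum(sum(h.vm_vcpus) for h in hosts)
    total_cores = sum(h.physical_cores for h in hosts)
    if total_cores == 0:
        raise ValueError("no physical cores reported")
    return total_vcpus / total_cores


if __name__ == "__main__":
    # Hypothetical two-host cluster with 32 physical cores per host.
    cluster = [
        Host("esx01", physical_cores=32, vm_vcpus=[4, 4, 8, 2, 2, 16, 8]),
        Host("esx02", physical_cores=32, vm_vcpus=[8, 8, 4, 4, 2, 2, 2, 2]),
    ]
    print(f"vCPU:pCPU = {vcpu_to_pcpu_ratio(cluster):.2f}:1")
```

With the sample numbers, the script prints `vCPU:pCPU = 1.19:1` (76 vCPUs on 64 cores). The ratio on its own says nothing about contention; it is most useful read alongside the CPU saturation figures.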

What Is It Not?

Now I should take a moment to add context on what it is not for, especially after spending this whole post building the tool up as the answer to world hunger. A good way to understand the tool’s primary purpose is to look at what its original creators desperately needed before they came up with the idea. This goes back to the early days of iSCSI storage. Everyone was running expensive fibre channel storage for all of their workloads, and 1GbE iSCSI solutions were just hitting the market, making shared storage affordable to more organizations. However, as with any new technology, there were staunch fibre channel users who resisted the idea of putting storage on the IP stack. The prevailing view at the time was that if you wanted performance, you needed fibre channel. But what many people struggled to communicate was what their actual performance needs were. Many organizations that defined their actual I/O profile found they could run a good chunk of their workloads on iSCSI and put that money toward other parts of their business. In its early days, DPACK helped define the I/O profile for many customers, and we saw iSCSI-based arrays skyrocket in market share.
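The I/O profile argument comes down to simple arithmetic: throughput is roughly IOPS multiplied by average I/O size, and that number can be compared against what a link can carry. The sketch below assumes a raw 1GbE line rate of 125 MB/s (10^9 bits/s divided by 8) and deliberately ignores Ethernet, TCP/IP, and iSCSI overhead, so real usable bandwidth will be lower. The function names and workload figures are hypothetical illustrations, not DPACK or Live Optics output.

```python
# Raw 1GbE line rate: 10^9 bits per second / 8 bits per byte = 125,000,000 bytes/s.
# Assumption: protocol overhead (Ethernet, TCP/IP, iSCSI) is ignored, so usable
# bandwidth in practice is lower than this figure.
GBE_LINE_RATE_BYTES_PER_SEC = 1_000_000_000 / 8


def throughput_bytes_per_sec(iops: float, avg_io_size_kib: float) -> float:
    """Approximate throughput as IOPS multiplied by the average I/O size in bytes."""
    return iops * avg_io_size_kib * 1024


def link_utilization(iops: float, avg_io_size_kib: float,
                     link_bytes_per_sec: float = GBE_LINE_RATE_BYTES_PER_SEC) -> float:
    """Share of one link's raw capacity the workload would consume (1.0 = saturated)."""
    return throughput_bytes_per_sec(iops, avg_io_size_kib) / link_bytes_per_sec


if __name__ == "__main__":
    # Hypothetical workloads: (label, IOPS, average I/O size in KiB).
    workloads = [
        ("small-block OLTP", 3_000, 8),
        ("large-block backup", 5_000, 64),
    ]
    for label, iops, size_kib in workloads:
        util = link_utilization(iops, size_kib)
        print(f"{label}: {util:.1%} of a 1GbE link")
```

With these made-up numbers, the small-block workload uses about 19.7% of a single link, while the large-block workload would need roughly 262% of one, which is exactly the kind of distinction a measured I/O profile lets you make instead of guessing.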

So, ultimately, Live Optics is meant for defining the baseline performance criteria for your infrastructure refresh or add-on. It is not meant to be a troubleshooting or long-term monitoring tool. There are many tools, such as vRealize Operations and SolarWinds, for those purposes, and they are designed to retain and analyze data over long periods. If you are having performance issues, Live Optics can sometimes help confirm which layer is taking the performance hit, but it is by no means intended to get you to a resolution. For example, Live Optics may show excessive page swapping on a given virtual host, but you would be better off using esxtop to determine what swapping is occurring and on which VM.

Wrap Up!

Data-driven decisions are what help modern businesses thrive. In IT, we are drowning in data and in questions about how to use it. With Live Optics, we can distill that data to make expansions or refreshes more concise, building proper criteria for the technology we evaluate.