Most people who know me are aware that my primary background in the tech field is storage. When I joined the vSAN team at VMware in 2017, it was during a period of rapid “hockey stick” growth, making it an incredibly exciting time. Hyper-Converged Infrastructure (HCI) was expanding fast, and there was never a dull moment.

One of the first things I had to master when joining the team was the math behind calculating usable storage capacity for vSAN. Without understanding this, estimating how many nodes a customer would need to meet their storage requirements would have been impossible. So, I thought I’d take some time to walk through the approach I use to make these calculations.

Before We Dive In—A Few Disclaimers

The method I outline here is one I’ve personally used (along with many others back in the day) to get a rough estimate. However, storage calculations have evolved with newer iterations of vSAN. Additionally, this guide is specifically for OSA (Original Storage Architecture)—while I believe it’s still applicable to ESA (Express Storage Architecture), I haven’t confirmed that firsthand.

For precise, official sizing, I recommend using the vSAN Sizer tool, which you can find here: vSAN Sizer.

Lastly, all calculations in this post use decimal (base 10) units, where 1 TB = 1,000 GB, rather than binary units. With that out of the way, let’s get into the details.
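To show why the unit convention matters, here is a small Python sketch that converts one AV36P node's 19.2 TB of raw capacity into decimal gigabytes and binary tebibytes. The helper names are my own and not part of any tool:

```python
# Assumed definitions: decimal TB/GB (SI) vs binary TiB (IEC).
TB = 10**12   # bytes in one decimal terabyte
GB = 10**9    # bytes in one decimal gigabyte
TIB = 2**40   # bytes in one binary tebibyte

def tb_to_gb(tb: float) -> float:
    """Decimal terabytes to decimal gigabytes (1 TB = 1,000 GB)."""
    return tb * TB / GB

def tb_to_tib(tb: float) -> float:
    """Decimal terabytes to binary tebibytes."""
    return tb * TB / TIB

raw_tb = 19.2  # AV36P raw capacity per node, as used in Example 1
print(f"{raw_tb} TB = {tb_to_gb(raw_tb):,.0f} GB = {tb_to_tib(raw_tb):.2f} TiB")
```

The binary figure comes out roughly 9% smaller, so mixing the two conventions in one estimate would skew the result.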

Storage Policies

This isn’t intended to be a deep dive into storage policies—you can check out my earlier blog posts for that. However, I do want to clarify that storage policies are applied at the object level and can vary based on specific requirements.

Most customers use a single storage policy across all VMs, and my recommendation is to always choose the most capacity-efficient policy your cluster size supports:

- 3 nodes → FTT=1 Mirroring (RAID-1)

- 4-5 nodes → FTT=1 Erasure Coding (RAID-5)

- 6-16 nodes → FTT=2 Erasure Coding (RAID-6)
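The cluster-size rule above maps directly to a lookup. This minimal Python sketch encodes only that rule of thumb; the function name and the 3 to 16 node bounds check are my own assumptions, and it ignores workloads that need a different policy:

```python
def recommended_policy(nodes: int) -> str:
    """Most capacity-efficient policy for an AVS cluster, per the rule of thumb above."""
    if not 3 <= nodes <= 16:
        raise ValueError("the rule of thumb covers clusters of 3 to 16 nodes")
    if nodes == 3:
        return "FTT=1 Mirroring (RAID-1)"
    if nodes <= 5:
        return "FTT=1 Erasure Coding (RAID-5)"
    return "FTT=2 Erasure Coding (RAID-6)"

for n in (3, 4, 6, 16):
    print(f"{n} nodes -> {recommended_policy(n)}")
```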

Since some workloads might still require RAID-1, it’s important to understand that your storage policy mix directly impacts your overall capacity. Here are the overhead factors for each policy:

- FTT=1 Mirroring (RAID-1) → 2x overhead

- FTT=1 Erasure Coding (RAID-5) → 1.33x overhead

- FTT=2 Erasure Coding (RAID-6) → 1.5x overhead
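The overhead factors follow from how each policy lays data out on disk. This Python sketch assumes the vSAN OSA layouts (RAID-1 with two full copies, RAID-5 as 3 data + 1 parity, RAID-6 as 4 data + 2 parity) and ignores witness components. It also adds a hypothetical `blended_overhead` helper for the mixed-policy case described above, where each policy's factor is weighted by its share of the VM data:

```python
from fractions import Fraction

# Assumed vSAN OSA layouts as (data, redundancy) units. Witness components are ignored.
LAYOUTS = {
    "RAID-1": (1, 1),  # FTT=1 mirroring: two full copies
    "RAID-5": (3, 1),  # FTT=1 erasure coding: 3 data + 1 parity
    "RAID-6": (4, 2),  # FTT=2 erasure coding: 4 data + 2 parity
}

def overhead_factor(policy: str) -> Fraction:
    """Raw capacity consumed per unit of VM data: (data + redundancy) / data."""
    data, redundancy = LAYOUTS[policy]
    return Fraction(data + redundancy, data)

def blended_overhead(mix: dict[str, Fraction]) -> Fraction:
    """Weighted overhead for a policy mix; `mix` maps policy -> share of VM data."""
    if sum(mix.values()) != 1:
        raise ValueError("policy shares must add up to 1")
    return sum(share * overhead_factor(policy) for policy, share in mix.items())

for policy in LAYOUTS:
    print(f"{policy}: {float(overhead_factor(policy)):.2f}x")

# Hypothetical mix: 80% of VM data on RAID-6, 20% held back on RAID-1
mix = {"RAID-6": Fraction(4, 5), "RAID-1": Fraction(1, 5)}
print(f"Blended: {float(blended_overhead(mix)):.2f}x")
```

In this hypothetical mix, the effective overhead rises from 1.5x to 1.6x, which shrinks usable capacity by about 6% compared with an all-RAID-6 cluster.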

Deduplication & Compression (DD/C)

Deduplication and compression are enabled by default for all AVS (Azure VMware Solution) clusters. The level of savings you achieve will vary depending on your workloads, but in the absence of specific data, I use 1.5x savings as a general rule of thumb.

This figure isn’t just based on “tribal knowledge” but is backed by data that the vSAN business unit gathered over time and shared with the field back in 2018.

Here’s what I’ve seen in real-world scenarios:

- Some customers only achieve 1.2x savings—so if you suspect that your environment doesn’t benefit much from DD/C, consider adjusting the estimate accordingly.

- Others achieve much higher savings—particularly with persistent VDI deployments that don’t use linked clones.

When in doubt, 1.5x is a safe assumption for rough sizing estimates.
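Because DD/C is applied as a simple multiplier, it is easy to see how sensitive the estimate is to the ratio you assume. This Python sketch (the function name is hypothetical) applies 1.0x, 1.2x, and 1.5x to the capacity that remains in Example 1's 8-node AV36P cluster after slack space and RAID-6 overhead, assuming savings are uniform across all data:

```python
def effective_capacity_gb(post_policy_gb: float, ddc_ratio: float) -> float:
    """Logical data that fits after DD/C, assuming one uniform savings ratio."""
    return post_policy_gb * ddc_ratio

# Example 1 capacity after slack (/1.25) and RAID-6 (/1.5): 153,600 / 1.25 / 1.5
post_policy_gb = 153_600 / 1.25 / 1.5  # = 81,920 GB

for ratio in (1.0, 1.2, 1.5):
    print(f"{ratio:.1f}x DD/C -> {effective_capacity_gb(post_policy_gb, ratio):,.0f} GB")
```

In this case, assuming 1.2x instead of 1.5x lowers the estimate by 24,576 GB.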

Slack Space Considerations

Slack space is another important variable, and while it may seem like an outdated requirement, it remains relevant—particularly because it’s explicitly referenced in the Azure VMware Solution SLA. You can review the SLA here: Azure VMware SLA.

In my calculations, I always factor in a 25% slack space requirement to ensure compliance. Keep in mind that on-prem calculations may differ, but since this post focuses on AVS storage sizing, I won’t be covering those scenarios.

Breaking Down the Storage Calculation

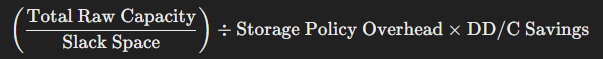

Now that we’ve covered the key variables, here’s the core formula for estimating usable storage capacity:
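In symbols, with R the raw capacity per node in GB, N the number of nodes, P the storage-policy overhead factor, and D the assumed DD/C ratio (these labels are mine), the calculation used in both examples below is:

$$
U = \frac{R \times N}{1.25 \times P} \times D
$$

For Example 1, R = 19,200, N = 8, P = 1.5, and D = 1.5.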

Let’s walk through an example cluster to determine its usable capacity.

Example 1: 8-Node AV36P Cluster

Each AV36P node has 19.2TB of raw capacity (you can find raw capacities for all node types here). To make calculations easier, we’ll use GB instead of TB.

$$(((19{,}200 \times 8) \div 1.25) \div 1.5) \times 1.5 = 122{,}880 \text{ GB} \approx 122 \text{ TB}$$

Step-by-Step Breakdown:

- Multiply the raw capacity per node by the number of nodes → 19,200 × 8

- Subtract slack space overhead (25%) → divide by 1.25

- Subtract storage policy overhead (RAID-6 FTT=2, overhead of 1.5x) → divide by 1.5

- Account for deduplication & compression (1.5x savings) → multiply by 1.5

- Final usable storage → ~122TB
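The four steps above translate directly into a function. This is a minimal Python sketch, not an official sizer; the function and parameter names are hypothetical, and it assumes a single node type and a single storage policy for the whole cluster:

```python
SLACK_DIVISOR = 1.25  # 25% slack-space allowance, applied as "divide by 1.25"

def usable_capacity_gb(raw_per_node_gb: float, nodes: int,
                       policy_overhead: float, ddc_ratio: float = 1.5) -> float:
    """Rough usable capacity for one homogeneous AVS cluster (ROM estimate only)."""
    raw_total = raw_per_node_gb * nodes           # step 1: total raw capacity
    after_slack = raw_total / SLACK_DIVISOR       # step 2: reserve slack space
    after_policy = after_slack / policy_overhead  # step 3: remove RAID overhead
    return after_policy * ddc_ratio               # step 4: apply DD/C savings

# Example 1: 8x AV36P (19,200 GB raw each) with RAID-6 FTT=2 (1.5x overhead)
usable = usable_capacity_gb(19_200, 8, policy_overhead=1.5)
print(f"{usable:,.0f} GB (~{usable / 1000:.1f} TB)")
```

Passing `policy_overhead=2.0` or `policy_overhead=4/3` instead covers the RAID-1 and RAID-5 cases.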

Example 2: 3-Node AV36 Cluster

Now, let’s calculate a minimum-size AV36 cluster. The AV36 has 15.2TB of raw storage per node.

$$(((15{,}200 \times 3) \div 1.25) \div 2) \times 1.5 = 27{,}360 \text{ GB} \approx 27.4 \text{ TB}$$

Here, we use RAID-1 (FTT=1 Mirroring), with its 2x overhead, instead of RAID-6, because a 3-node cluster is too small for erasure coding.
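Here are the same steps for a 3-node AV36 cluster, written out as a short Python sketch with the intermediate values in the comments. The variable names are my own:

```python
# Example 2: 3x AV36 (15,200 GB raw each), RAID-1 FTT=1 (2x overhead), 1.5x DD/C
raw_per_node_gb, nodes = 15_200, 3
slack_divisor, policy_overhead, ddc_ratio = 1.25, 2.0, 1.5

raw_total = raw_per_node_gb * nodes           # 45,600 GB raw
after_slack = raw_total / slack_divisor       # 36,480 GB after slack
after_policy = after_slack / policy_overhead  # 18,240 GB after mirroring
usable = after_policy * ddc_ratio             # 27,360 GB after DD/C
print(f"{usable:,.0f} GB (~{usable / 1000:.1f} TB)")
```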

Handling Mixed Node Configurations

With newer deployments often mixing AV36P and AV64 nodes, determining total usable storage across heterogeneous clusters can be complex.

To simplify this, I’ve built a Google Sheets tool, as well as a PHP calculator on this site, to help estimate storage across multiple node types. Keep in mind that neither is an official sizing tool; use them for Rough Order of Magnitude (ROM) estimates only.
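When a private cloud has more than one node type, one simple approach is to size each homogeneous cluster separately and add the results. The Python sketch below assumes exactly that. It is not necessarily how the spreadsheet or PHP calculator work, the `Cluster` class is hypothetical, and it fills in only the AV36P and AV36 raw capacities quoted in this post. To include AV64 nodes, you would add an entry using their raw capacity from Microsoft’s node-type documentation.

```python
from dataclasses import dataclass

SLACK_DIVISOR = 1.25  # 25% slack-space allowance, applied as "divide by 1.25"

@dataclass
class Cluster:
    """One homogeneous cluster within a private cloud (hypothetical input format)."""
    name: str
    raw_per_node_gb: float
    nodes: int
    policy_overhead: float
    ddc_ratio: float = 1.5

    def usable_gb(self) -> float:
        # raw total -> minus slack -> minus policy overhead -> plus DD/C savings
        return (self.raw_per_node_gb * self.nodes / SLACK_DIVISOR
                / self.policy_overhead * self.ddc_ratio)

def total_usable_gb(clusters: list[Cluster]) -> float:
    """Sum per-cluster ROM estimates; each cluster is sized with its own policy."""
    return sum(c.usable_gb() for c in clusters)

clusters = [
    Cluster("AV36P cluster", 19_200, 8, policy_overhead=1.5),
    Cluster("AV36 cluster", 15_200, 3, policy_overhead=2.0),
]
for c in clusters:
    print(f"{c.name}: {c.usable_gb():,.0f} GB")
print(f"Total: {total_usable_gb(clusters):,.0f} GB")
```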

I hope this guide helps you navigate AVS storage sizing with more confidence. If you have any questions or need a copy of the spreadsheet, feel free to reach out!