Intro

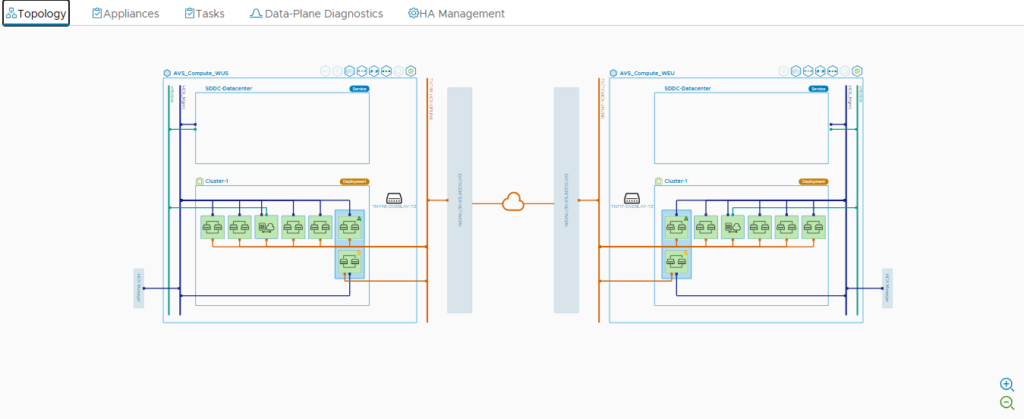

In my previous role as an AVS CSA, I collaborated with hundreds of customers to plan their HCX installations. Building on my previous post that explored the intricacies of an HCX Service Mesh and its various configurations, this blog post will take a more focused approach. We’ll surgically examine how to determine the necessary number of IP addresses for HCX Connectors and service mesh appliances. This is a frequently encountered planning task, as IT administrators must either request these IPs or, if managing their own IP space, ensure they are reserved within their IPAM tool. If you aren’t a big blog reader, first off, what are you doing here?! Second, I made a video on this topic for our AVS-Team YouTube channel, linked below.

The Easy Ones: HCX Connector and IX Appliance

Let’s begin with the simpler components to plan for: the HCX Connector and the Interconnect (IX) appliances. The HCX Connector is the most straightforward. There’s a direct 1:1 relationship between an HCX Connector and vCenter. Therefore, if you are migrating VMs from a single vCenter, you will typically deploy only one HCX Connector. While it’s technically possible to migrate VMs from vCenter B to vCenter A (which has an HCX Connector) in a linked-mode vCenter environment, network configurations and vSphere setups might complicate this approach. For simplicity and to ensure a smooth migration process, deploying an HCX Connector for each vCenter is generally the best practice.

Each HCX Connector requires only one IP address. So, the calculation is simple:

Number of HCX Connector IPs needed = Number of vCenters you plan to migrate VMs from.

IP planning for the IX appliance is also relatively simple, but it can vary based on the HCX services you intend to use. There are two primary scenarios where you might need more than one IX appliance:

Scenario 1: You intend to use HCX vMotion, but your vMotion networks across vSphere clusters are isolated and cannot directly communicate. While rare, this situation can occur. Although vMotion networks are often non-routable for security reasons, vSphere administrators generally maintain consistency across clusters to facilitate migration flexibility.

Scenario 2: You plan to deploy multiple HCX Service Meshes to enhance parallel migrations or to segregate specific migration workloads.

It’s crucial to remember that there is only one IX appliance deployed per service mesh.

Typically, the IX appliance operates within multiple networks:

- Management Network (Common): This is the network that enables the IX appliance to communicate with your ESXi hosts.

- vMotion Network (Common): If you plan to leverage HCX vMotion, the IX appliance needs to access this network. If your vMotion network is routable to the management network, a separate IP might not be strictly necessary. However, as a best practice, reserving a dedicated IP for the vMotion network is advisable.

- Uplink (Uncommon): In scenarios where the management network has restricted outbound access, you might need to allocate an IP address in a network with outbound connectivity to the Azure VMware Solution /22 network. In most cases, routing to AVS exists, but firewall ports may require configuration.

- Replication (Uncommon): If you regularly use vSphere Replication (for example, alongside Site Recovery Manager), you might have configured a separate replication VMkernel (VMK) interface on your hosts to isolate replication traffic. This is distinct from SAN replication networks. If you’re unsure, examine the VMK interfaces on one of your hosts. If none is enabled for the vSphere Replication service, replication traffic most likely flows over the management interface.

In my experience, the most common setup involves the IX appliance having two interfaces: one in the management network and another in the vMotion network. This is because, in most environments, the management network can reach the destination HCX networks (AVS /22 network), and a separate replication network is not configured.

Therefore, as a general guideline:

IX appliance IPs needed in each of the Management and vMotion networks = Number of service meshes required for your migration needs.
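To make the per-network math concrete, here’s a minimal sketch in Python. The function name `ix_ips_per_network` and the network labels are hypothetical, chosen for illustration. The only rule it encodes is the one above: one IX appliance per service mesh, and one IP for that appliance in each network it connects to.

```python
def ix_ips_per_network(service_meshes, networks=("management", "vmotion")):
    """Return how many IX IPs to reserve in each network.

    Hypothetical helper: assumes one IX appliance per service mesh and one
    IP per network the IX connects to (management and vMotion by default;
    add "uplink" or "replication" only if your environment needs them).
    """
    if service_meshes < 1:
        raise ValueError("at least one service mesh is required")
    return {network: service_meshes for network in networks}


# Two service meshes, e.g. because vMotion networks are isolated per cluster
plan = ix_ips_per_network(2)
print(plan)                # {'management': 2, 'vmotion': 2}
print(sum(plan.values()))  # 4
```

Passing an extra network such as `"uplink"` simply adds another entry with the same count, which mirrors how each additional IX interface costs one IP per service mesh.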

The Harder One: Network Extension Appliances

Now, let’s tackle Network Extension (NE) appliances, where the planning becomes more nuanced. It’s even possible you might not need NE appliances at all! This is because NE appliances are only deployed if you intend to use the Network Extension service. Network Extension is a highly valued feature of HCX, so it’s commonly encountered in deployments.

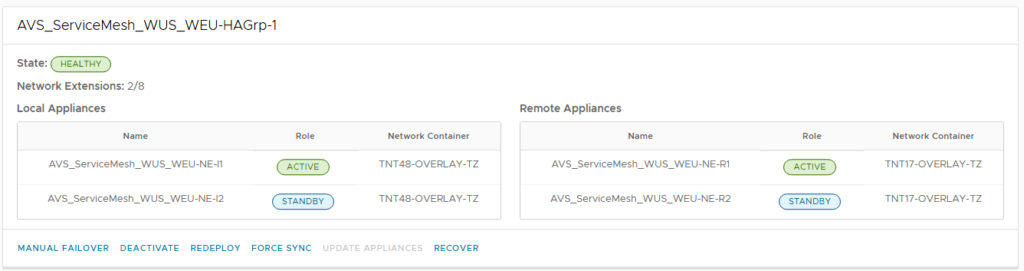

Unlike IX appliances, Network Extension appliances typically have a single interface, residing within your “Management network.” The exception is the “uplink” network scenario, as discussed in the IX section, but this is less frequent. So, the key question is: how many NE appliances will you need? To answer this, we must consider three critical factors:

- Network Containers: HCX uses “Network Containers” to categorize virtual networking objects, specifically vSphere Distributed Switches (VDS) and NSX-T Transport Zones (TZ). Crucially, a single NE appliance or HA pair can only be associated with one network container. Therefore, if you have multiple VDSs or TZs you intend to extend, you’ll require multiple NE appliances or pairs.

- Network Extension HA: Implementing Network Extension High Availability (HA) is a recommended best practice. NE HA uses network heartbeats for rapid traffic takeover, which is significantly faster than relying on vSphere HA to restart a VM upon a host failure. For HA, each NE appliance needs a peer appliance to connect to, effectively doubling the number of NE appliances required for HA pairs.

- Network Extension Performance and Maximums: Each NE appliance has inherent limits. The primary one to consider is that an NE appliance can extend at most 8 networks concurrently, so if you need to extend more than 8 networks at the same time, you’ll need additional NE appliances to handle the extra extensions. Second, each NE appliance has a maximum bandwidth capacity. For the precise, advertised maximums, consult broadcom.configmax.com. To monitor NE performance, watch the appliance VM’s CPU utilization. Sustained CPU usage of 60-70% might indicate the NE is struggling to keep up with network demand, suggesting the need for an additional NE or NE pair.

With these factors in mind, how do we calculate the number of NE appliances? Here’s a general formula I’ve found useful:

Number of NE appliances = (Total number of concurrent extensions / 8, rounded up) x HA factor (2 if using NE HA, 1 if not), up to a maximum of 10 total.

Currently, it’s my understanding that the maximum number of NE appliances supported per AVS SDDC is 10, constrained by the IP space within the AVS /22 network. If anyone has more up-to-date information on this, please let me know!
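Here’s a minimal Python sketch of that rule of thumb, assuming the 8-networks-per-appliance limit and the 10-appliance ceiling described above. All function and container names are hypothetical. The second function is an extension of the formula rather than part of it: because one NE appliance or HA pair serves only one network container, it applies the rounding separately per VDS or Transport Zone, which can raise the count.

```python
import math

NE_NETWORKS_PER_APPLIANCE = 8  # concurrent extensions per NE appliance
MAX_NE_APPLIANCES = 10         # author's understanding of the per-SDDC limit


def _check_limit(needed):
    # The formula caps out at 10; surface the problem instead of hiding it.
    if needed > MAX_NE_APPLIANCES:
        raise ValueError(
            f"{needed} NE appliances exceeds the assumed limit of {MAX_NE_APPLIANCES}"
        )
    return needed


def ne_appliances(concurrent_extensions, use_ha=True):
    """ceil(extensions / 8) x HA factor, treating all extensions as one pool."""
    if concurrent_extensions <= 0:
        return 0  # no Network Extension service, no NE appliances
    ha_factor = 2 if use_ha else 1
    needed = math.ceil(concurrent_extensions / NE_NETWORKS_PER_APPLIANCE) * ha_factor
    return _check_limit(needed)


def ne_appliances_per_container(extensions_by_container, use_ha=True):
    """Same rule applied per network container (VDS or NSX-T Transport Zone)."""
    ha_factor = 2 if use_ha else 1
    needed = sum(
        math.ceil(count / NE_NETWORKS_PER_APPLIANCE) * ha_factor
        for count in extensions_by_container.values()
        if count > 0
    )
    return _check_limit(needed)


print(ne_appliances(12))                # 4
print(ne_appliances(12, use_ha=False))  # 2
# Hypothetical container names: 13 extensions split across two VDSs
print(ne_appliances_per_container({"vds-prod": 10, "vds-dev": 3}))  # 6
```

Pooled together, 13 extensions would need only 4 appliances with HA, but split across two VDSs they need 6, because each container rounds up on its own. Raising an error past 10 is a design choice so an oversized plan is flagged rather than silently truncated.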

Some Final Thoughts

It’s a common misconception that service mesh appliances are manually deployed. Remember that HCX automatically deploys these appliances during service mesh creation through the interconnect screen. HCX deploys them to a vSphere cluster specified in your Compute Profile, and their IP addresses and port groups are determined by the Network Profile configurations. Also, remember that in Azure VMware Solution, HCX compute and network profiles are pre-configured for you. Therefore, this IP planning primarily applies to your on-premises environment. This planning exercise helps you determine how many IPs to reserve in your IPAM tool and which IP pools to configure in your Network Profile. Did I miss anything? Connect with me on LinkedIn!

Of Course I Had to Make a Tool

Leveraging the logic outlined above, I’ve developed a tool to assist you in calculating your IP needs. My ambitious goal is to expand this tool to generate a topology map, providing insights into total IPs, vCenter relationships, and relevant firewall rules. The tool can be found below, or you can find this tool and others I’ve built at my Pet Projects page.
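For readers who want to see the pieces combined in code, here’s a minimal Python sketch that rolls the three rules of thumb into one estimate. It is not the tool mentioned above, just an illustration under stated assumptions: the `HcxPlan` and `estimate_ips` names are hypothetical, each IX appliance sits only in the management and vMotion networks, NE appliances use a single management interface, and the uncommon uplink and replication networks are ignored.

```python
import math
from dataclasses import dataclass

NE_NETWORKS_PER_APPLIANCE = 8  # concurrent extensions per NE appliance
MAX_NE_APPLIANCES = 10         # author's understanding of the AVS limit


@dataclass
class HcxPlan:
    """Hypothetical inputs for an on-premises HCX IP estimate."""
    vcenters: int                   # one HCX Connector per source vCenter
    service_meshes: int             # one IX appliance per service mesh
    concurrent_extensions: int = 0  # 0 if you won't use Network Extension
    use_ne_ha: bool = True          # NE HA doubles the appliance count


def estimate_ips(plan: HcxPlan) -> dict:
    """Estimate IPs to reserve, assuming only management and vMotion networks."""
    ha_factor = 2 if plan.use_ne_ha else 1
    ne = math.ceil(max(plan.concurrent_extensions, 0) / NE_NETWORKS_PER_APPLIANCE) * ha_factor
    if ne > MAX_NE_APPLIANCES:
        raise ValueError(f"{ne} NE appliances exceeds the assumed limit of {MAX_NE_APPLIANCES}")
    estimate = {
        "hcx_connectors": plan.vcenters,                         # not from Network Profile pools
        "network_profile_management": plan.service_meshes + ne,  # IX + NE interfaces
        "network_profile_vmotion": plan.service_meshes,          # one IX per mesh
    }
    estimate["total"] = sum(estimate.values())
    return estimate


print(estimate_ips(HcxPlan(vcenters=1, service_meshes=1, concurrent_extensions=12)))
# {'hcx_connectors': 1, 'network_profile_management': 5, 'network_profile_vmotion': 1, 'total': 7}
```

The two `network_profile_*` entries map to the IP pools you’d configure in your on-premises Network Profiles, while the Connector IPs are reserved separately since service mesh creation doesn’t deploy the Connector.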